Absolute 0 CCP: Use DeepSeek R1 with GPU TEE for Verified AI Security

2025-02-07

Recent discussions in the media have raised concerns about data privacy and security in AI models developed overseas. With articles in The Independent, Reuters, and The Guardian drawing attention to data handling practices, it’s clear that verifying model security is essential. If you’ve ever thought, “Don’t trust Chinese AI models? Verify them yourself,” you’re in good company.

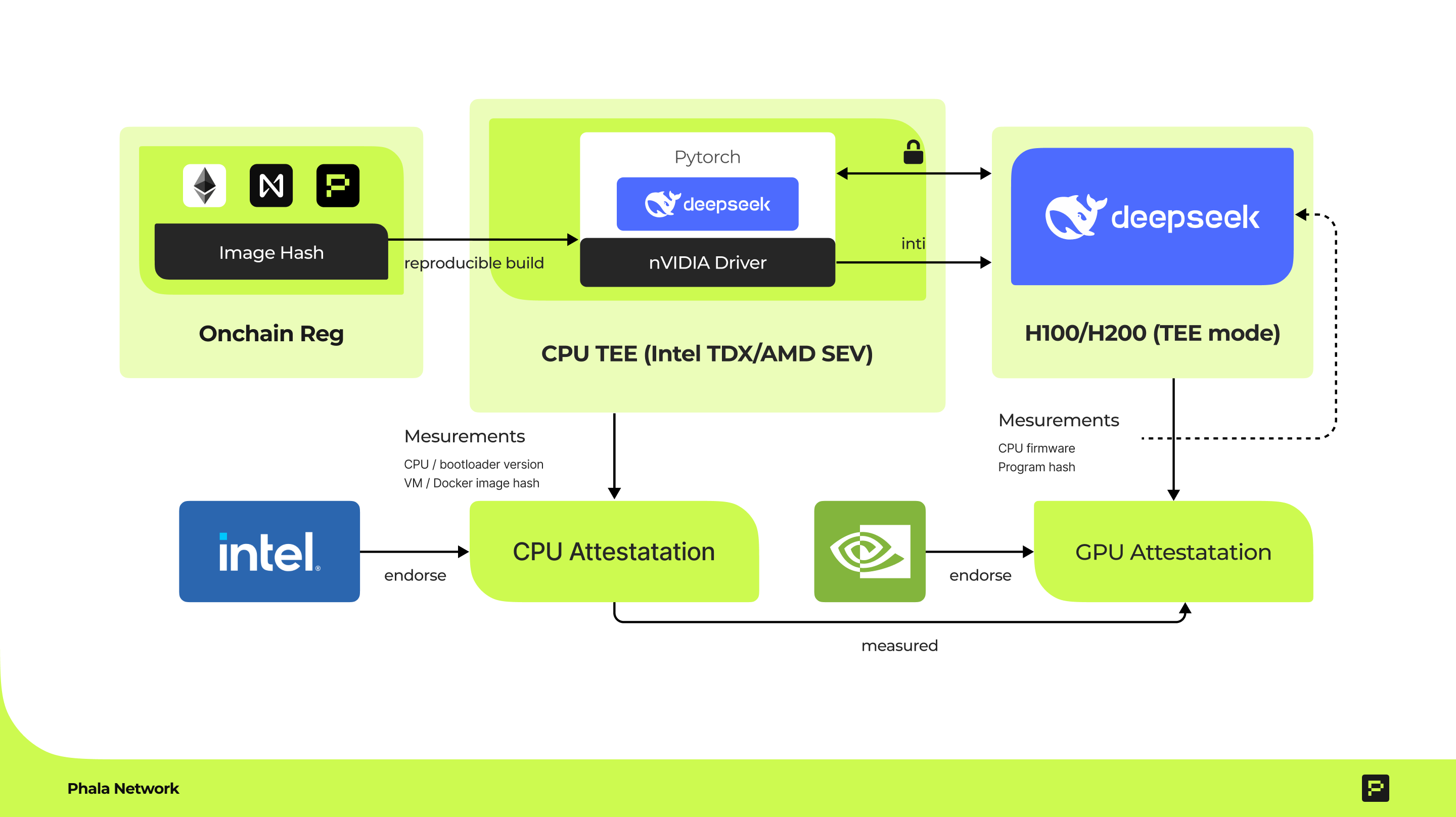

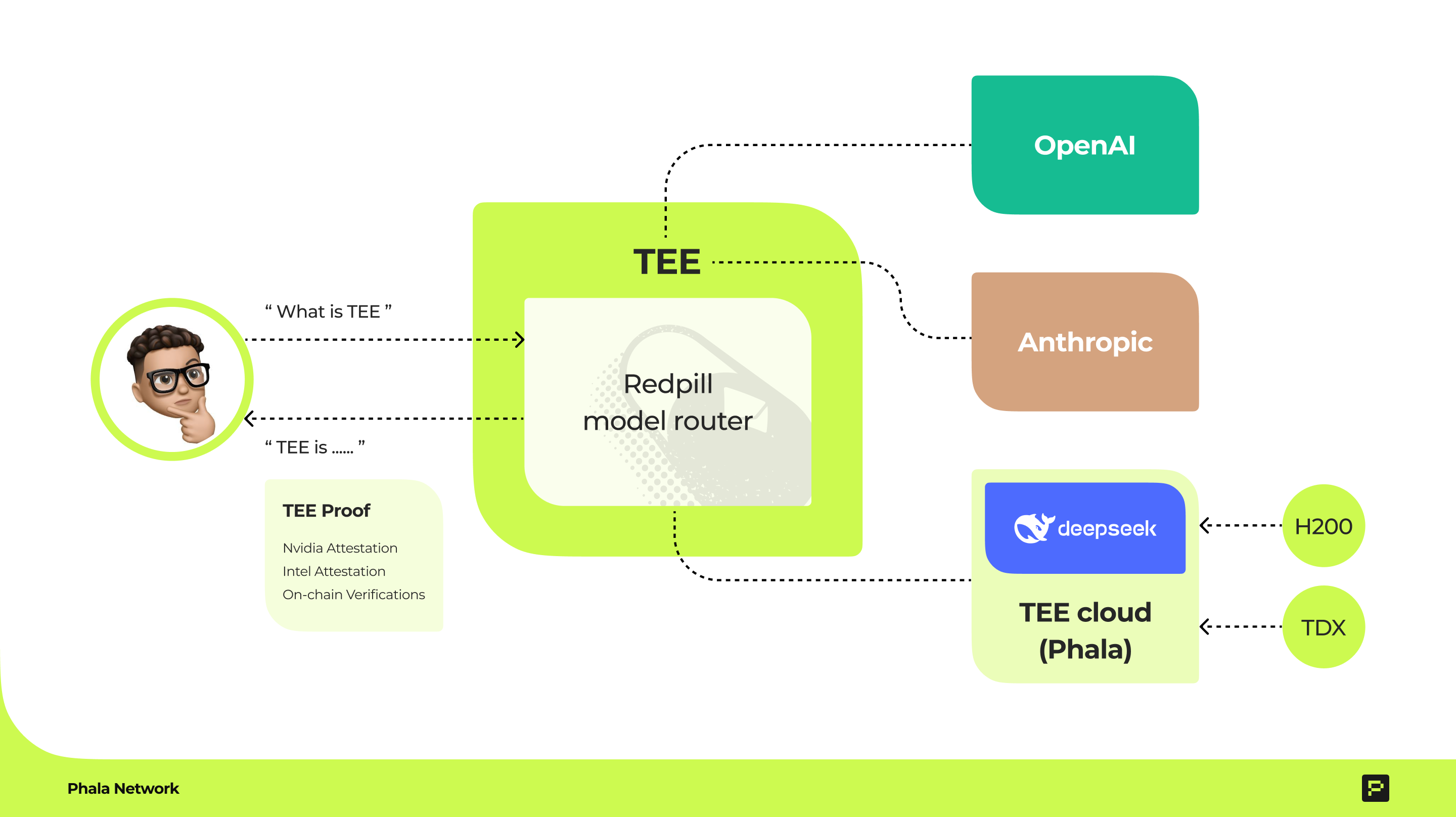

One solution is to use the security features of the Phala Network TEE (Trusted Execution Environment) tech stack. By combining Redpill and private-ml-sdk, this stack lets you run AI models like DeepSeek R1 inside a TEE, so that data is processed in a secure, isolated environment.

Developers and security-conscious users now have a practical way to run inference using the Phala tech stack while verifying that their data remains protected.

Running DeepSeek in a TEE with the Private-ML-SDK

The technical backbone behind this secure deployment is the private-ml-sdk, an SDK co-developed by Nearai and Phala. It provides the tools needed to host and run AI models like DeepSeek R1 inside a TEE for secure inference. Here’s a concise guide on how it works:

- Access the Model:

Start by exploring DeepSeek R1 via Redpill, on the “DeepSeek R1 on Phala” page.

- Setup and Run:

Follow the private-ml-sdk steps to host and run the model inside a TEE. This involves setting up your environment, compiling the SDK, configuring the model, and launching the secure server.

- Verify Security:

Use the generated remote attestation reports to confirm that your model is running inside a genuine TEE. This verification gives you evidence, rather than a promise, that the data and computations are isolated.

- Run Inference:

Connect your client application to send queries and receive responses—all processed within a trusted environment.

By following these steps, developers can validate the DeepSeek R1 model and apply the same secure, verifiable inference process to any model they choose using the Phala tech stack.
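To make the verification step more concrete, here is a minimal sketch of the kind of check a client might perform on an attestation report. It is an illustration only: the report layout (`measurement` and `report_data` fields as hex strings) and the function name `report_matches` are hypothetical and are not taken from private-ml-sdk. It also skips the most important part of real attestation, which is validating the report's signature against the hardware vendor's certificate chain.

```python
import hashlib
import hmac


def report_matches(report: dict, expected_measurement: str, nonce: bytes) -> bool:
    """Check a (hypothetical) parsed attestation report.

    Assumed report layout, for illustration only:
      report["measurement"]  hex digest identifying the code loaded in the TEE
      report["report_data"]  hex SHA-256 of the nonce the client supplied
    A real verifier must first validate the report's signature chain.
    """
    # The measurement tells us *which* code is running in the enclave.
    measurement_ok = hmac.compare_digest(
        report.get("measurement", ""), expected_measurement
    )
    # Binding a fresh nonce shows the report was produced for this request
    # and is not a replay of an older one.
    expected_report_data = hashlib.sha256(nonce).hexdigest()
    nonce_ok = hmac.compare_digest(
        report.get("report_data", ""), expected_report_data
    )
    return measurement_ok and nonce_ok
```

The two checks answer different questions: the measurement identifies the software inside the TEE, while the nonce binding ties the report to the current session.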

Accessing DeepSeek R1 Through TEE Cloud

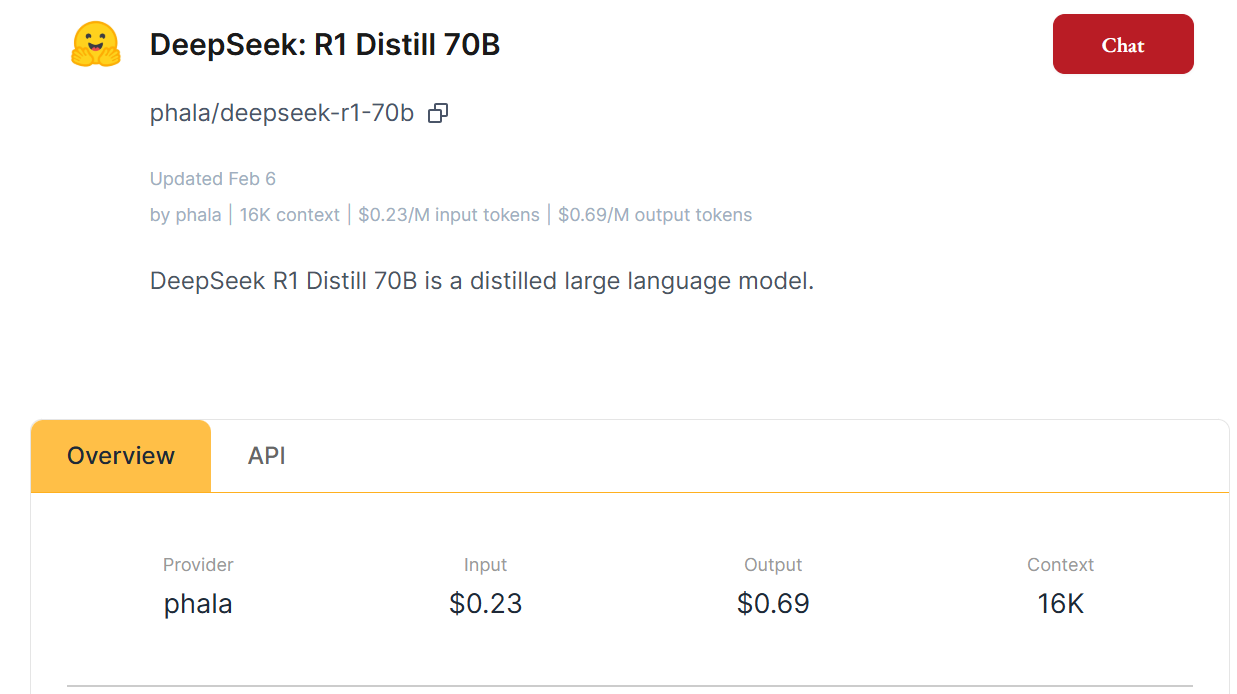

Introducing Redpill – a secure cloud service that uses TEE to run LLMs like DeepSeek with end-to-end privacy. Data is encrypted before entering the TEE, processed securely in isolation, and returned over a secure channel, with remote attestation ensuring the TEE's integrity. For those ready to experiment, test the DeepSeek R1 Distill 70B parameter model directly via the Redpill website.

Disclaimer: This is a distilled version of R1, as there are temporary compute constraints on running larger models, such as the full R1 (660B model) or DeepSeek V3, via private-ml-sdk.
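For readers who prefer to call the model programmatically, the sketch below shows how a client might build an inference request. It assumes the service accepts an OpenAI-style chat-completions payload, which this post does not state. The `BASE_URL` and `MODEL_ID` values are placeholders rather than real Redpill values, so check the Redpill documentation for the actual endpoint and model identifier.

```python
import json
import urllib.request

# Placeholders, not real values: substitute the endpoint and model
# identifier given in the Redpill documentation.
BASE_URL = "https://example.invalid/v1"
MODEL_ID = "deepseek-r1-distill-70b"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Once the placeholders are replaced with real values, the request could be sent with `urllib.request.urlopen(build_chat_request("Hello", api_key))` and the JSON response parsed with `json.load`.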

Closing Thoughts

Running AI models like DeepSeek R1 in a TEE using Phala’s infrastructure and the private-ml-sdk provides a reliable method for secure inference. With direct API access via Redpill and a clear set of technical steps to host and verify your model using private-ml-sdk, developers have a powerful option to protect sensitive data and build trust in AI services.

Stay secure, test confidently, and verify your AI’s privacy protection with the Phala TEE tech stack.